- Print

- DarkLight

- PDF

How to copy data from On-premises data store to an Azure data store with Azure Data Factory?

- Print

- DarkLight

- PDF

We learnt in the previous article that Azure Data Factory is a Microsoft cloud based ETL service, that we can use it to Extract, Transform and Load of the data hosted in local and cloud data stores, in a way that meets the business requirements.

In this article, we will see how to copy data from a SQL Server instance hosted in an on-premises server into Azure blob container in Azure Data Factory.

Prerequisites

Azure Subscription.

Azure Data Factory.

SQL Server installed in an on-premises server.

Self-hosted IR installed and configured in the SQL Server machine.

Configure the Copy Activity

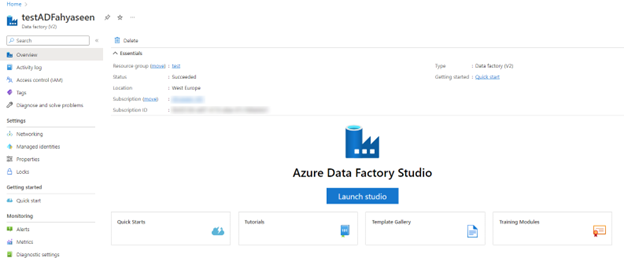

Open the Azure Portal using your Internet Browser and search for your Azure Data Factory service.

In the Azure Data Factory, click Launch Studio to open the Azure Data Factory Studio:

- In the Azure Data Factory Studio, click in the pencil icon to start designing the pipeline:

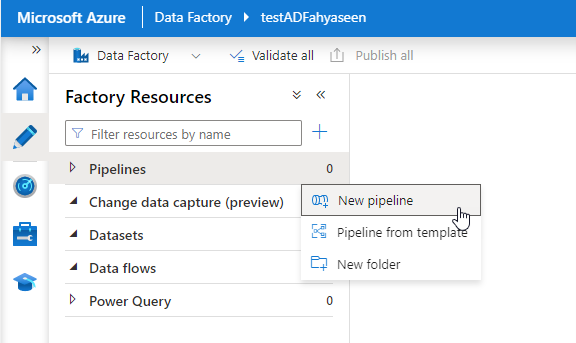

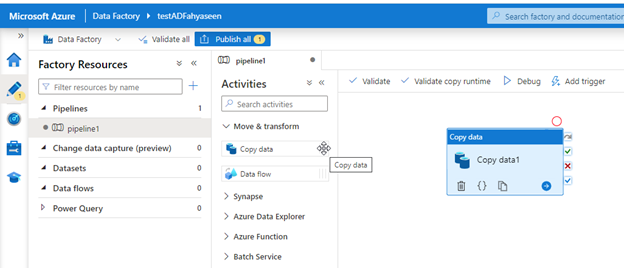

- In the Author window, click beside the Pipelines list and choose to create a New Pipeline:

- From the list of activities, drag the Copy Data activity to the design pane:

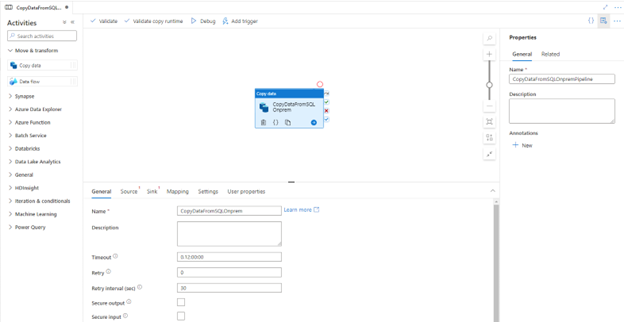

- You can start by providing a name for the Pipeline and the Copy activity as below:

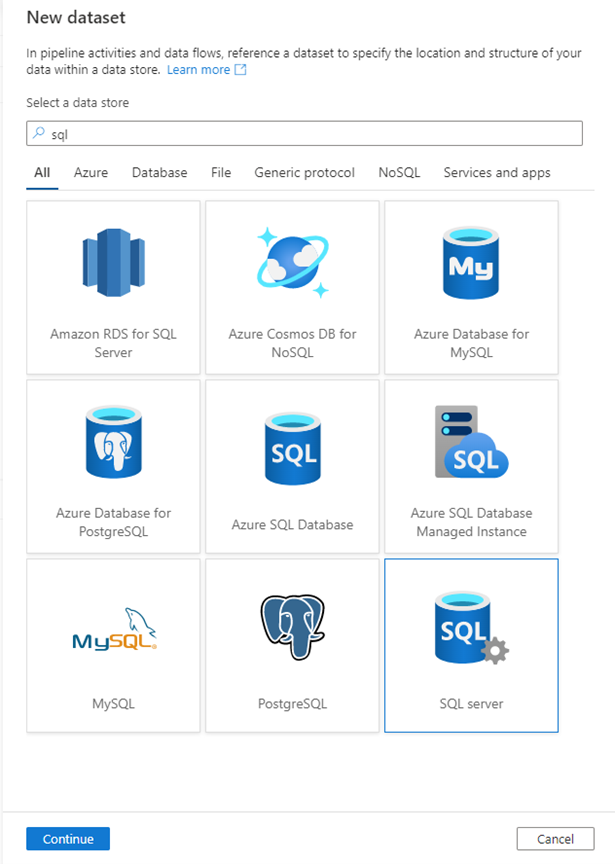

- In the copy activity source, click + New to create a new dataset and linked service to point to the On-premises SQL Server. First you need to choose SQL Server as a dataset type:

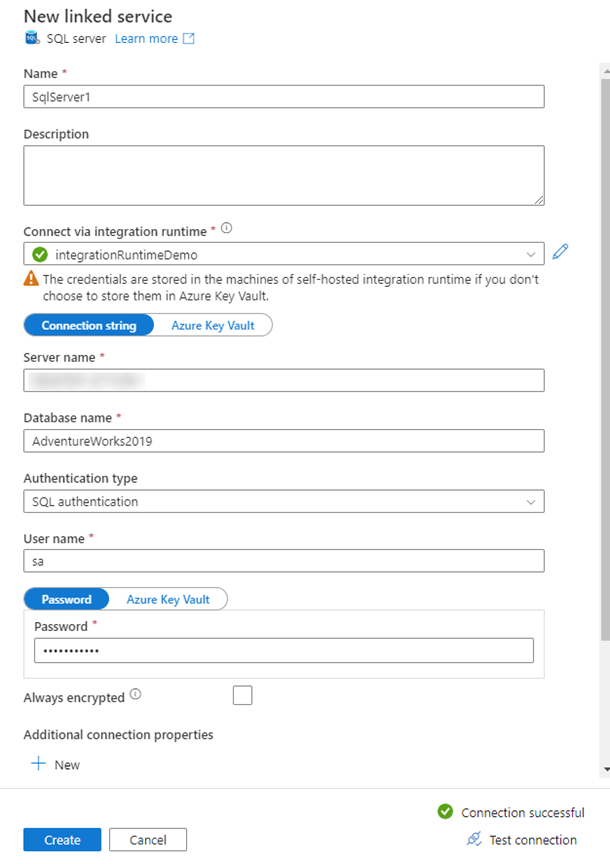

- In the New Linked Service window, provide the name of the on-premises SQL Server, Database name and the credentials that will be used to connect to the SQL Server, taking into consideration that you choose the SH-IR that we created in the previous article in the Connect Via IR option. Click Test Connection to make sure that the configurations are correct:

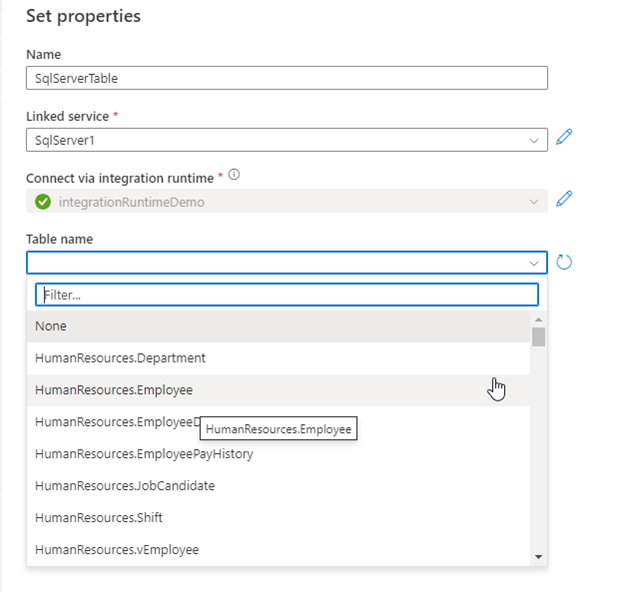

- In the Datasert properties, choose the table from which you will pull the data:

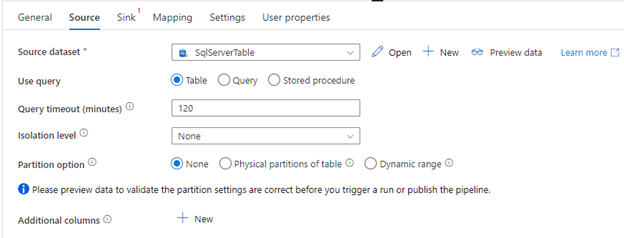

Take into consideration that you can provide the query or the stored procedure that will be used to pull custom data from multiple tables instead of providing the table name:

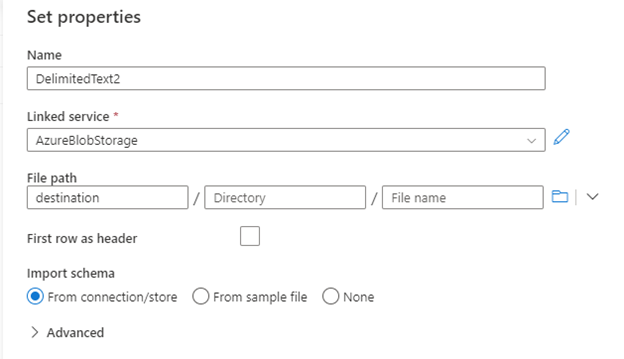

- Perform the same configurations step to configure the Azure Blob Storage container to act as a sink for the copy activity.

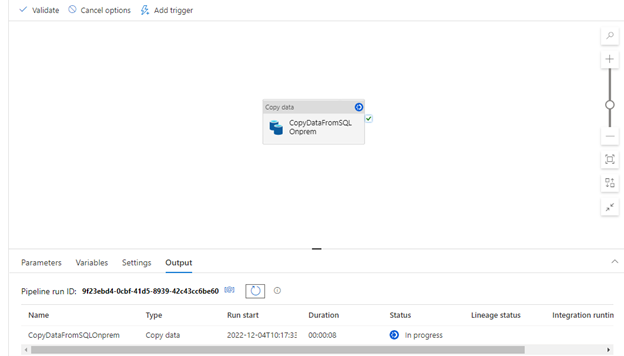

- To start the copy process, click on the Debug button and monitor the copy progress in the Output window:

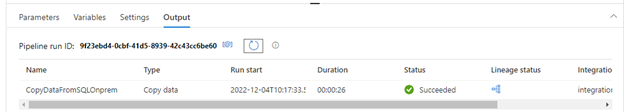

- You can confirm that the copy process completed successfully from the Output window as below: